8 minutes

Building Enterprise Grade AI Capability - A Structured View

Introduction

Building enterprise-grade AI capability isn’t a sprint. Most organisations that have dabbled with AI focus on the shiny bit: the model, the prompt, the first working demo. What separates experimental play from sustainable enterprise capability is everything else - the scaffolding, the oversight, the systems thinking.

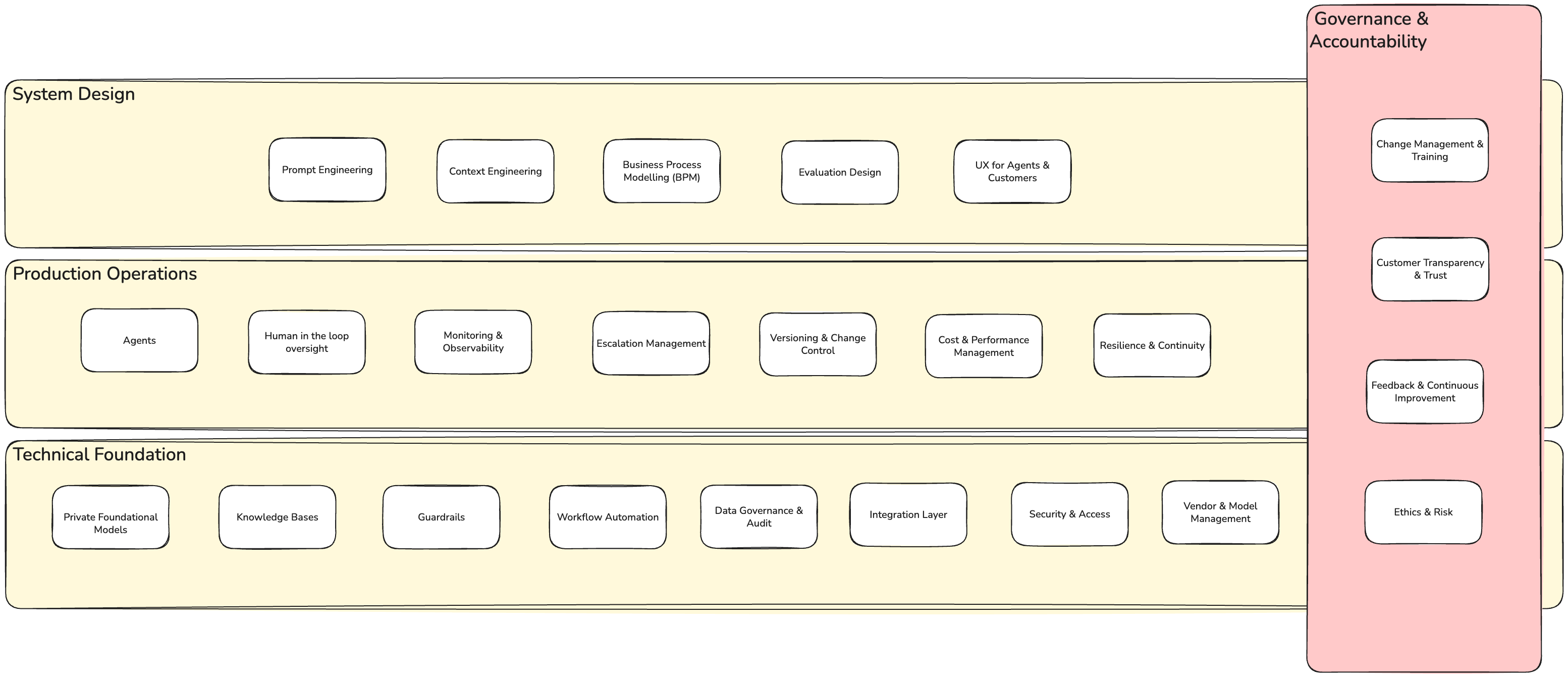

The diagram above maps that destination. It’s not a technology stack. It’s a capability framework - a vision of what mature, production-grade AI systems actually require.

But here’s the thing: you don’t build this all at once, and you don’t build it in the order it’s presented here.

This article describes the north star: what a complete, sustainable system looks like. If you’re running AI in anger - processing real workflows, making consequential decisions - this is what you’re aiming for. The three layers aren’t sequential; they’re interdependent. But they’re also the components of something that works.

In a follow-up piece, we’ll map the actual journey: which prerequisites genuinely block you, which ones you learn by doing, and how experimentation becomes your safe way to unlock these capabilities layer by layer.

For now: the destination. Read this knowing you won’t need all of it immediately. Read it knowing you probably need more of it than you think.

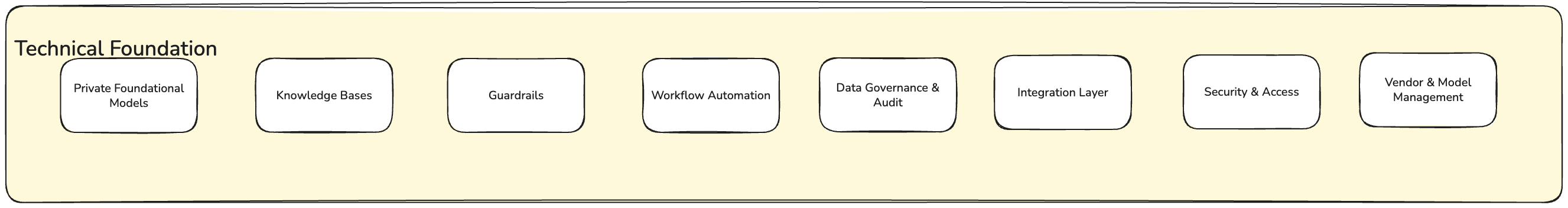

Technical Foundation: The Unglamorous Base Layer

These are the systems no one gets excited about. They’re also the ones that separate a proof of concept from something you can actually run.

Private Foundational Models

Start here if you’re serious. Public models are great for exploration, but enterprise AI requires control: over behaviour, over data, over drift over time. A private model - whether fine-tuned, retrieval-augmented, or both - is an investment that buys you independence, auditability, and the ability to say “no” to vendors when needed.

Knowledge Bases & Data Governance

AI is only as good as what it can see. A knowledge base isn’t just a database you connect; it’s a curated, versioned collection of your company’s decision-making. It requires governance: who owns it, how it’s updated, how stale entries are retired, how lineage is tracked. Without this, your AI becomes a confidence machine that sounds right.

Guardrails

This is about boundaries, not restrictions. Guardrails define what the AI can and cannot do: which APIs it can call, which fields it can modify, when it must escalate to a human. Well-designed guardrails make human oversight workable instead of impossible.

Workflow Automation

Not everything the AI does is conversational. Much of it is orchestration: routing, batching, calling systems, waiting for approvals. This layer handles the boring glue that makes agentic workflows actually run.

Integration Layer

Your AI system doesn’t exist in isolation. It needs to talk to your CRM, your finance system, your knowledge repository. A clean integration layer - with error handling, retry logic, and rate limiting - is what keeps the whole thing from becoming fragile.

Security & Access Controls

If your AI can read anything, it can leak anything. Proper security isn’t paranoia; it’s the base requirement. This includes model access, data access, audit trails, and the ability to lock things down when needed.

Vendor & Model Management

You’ll likely use multiple models (one for reasoning, one for speed, one for embeddings). This layer manages versioning, switching, licensing, and the risk of vendor lock-in.

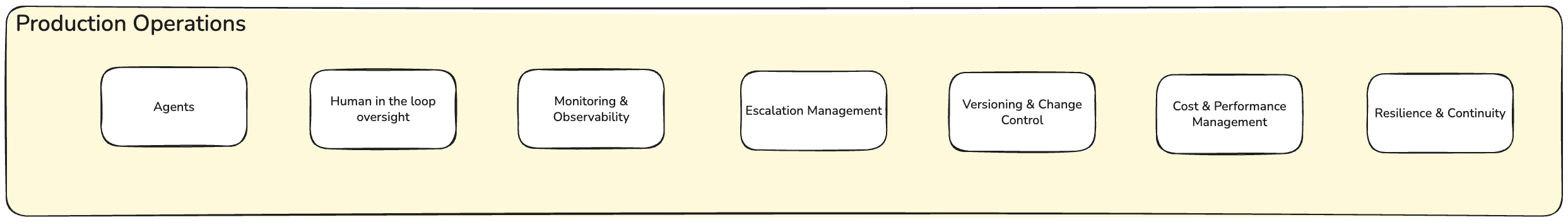

Production Operations: Running the Machine

Now you have the foundation. This layer is about making the system reliable, observable, and controllable in production.

Agents

The actual autonomous systems doing the work. Not just chatbots - agents that take action, make decisions within guardrails, and know when to ask for help. Good agent design starts with clear intent and narrow domains.

Human in the Loop Oversight

This is the heartbeat. Not all decisions should be automated. The question isn’t “can we automate this?” but “should we?”. Some workflows benefit from human review before execution; others need review after. Either way, someone is accountable. Design the loop to make that review workable - clear summaries, flagged risks, easy escalation.

Monitoring & Observability

You can’t manage what you can’t see. This means logging not just that a task ran, but what the AI reasoned, why it chose a particular path, what it cost, where it was wrong. Observability is how you spot drift, bias, and cost creep before they become crises.

Escalation Management

Not everything the AI tries will work. APIs fail, policies change, edge cases emerge. Escalation isn’t failure - it’s design. Clear escalation paths (to systems, to humans, to humans with specific skills) keep your AI from silently failing or getting stuck in loops.

Versioning & Change Control

Your prompts, your guardrails, your models - they all change. You need to be able to roll back, compare, audit who changed what and when. This sounds like engineering hygiene. It’s actually how you keep people’s trust.

Cost & Performance Management

Every API call costs something. Every decision takes time. Without visibility into cost and latency by workflow and by user, your AI system will slowly drift into inefficiency or your bill will silently triple. This layer keeps both accountable.

Resilience & Continuity

What happens when your AI supplier has an outage? When a downstream system is down? When a model breaks? Resilience means fallback plans, graceful degradation, and the ability to serve reduced functionality rather than nothing.

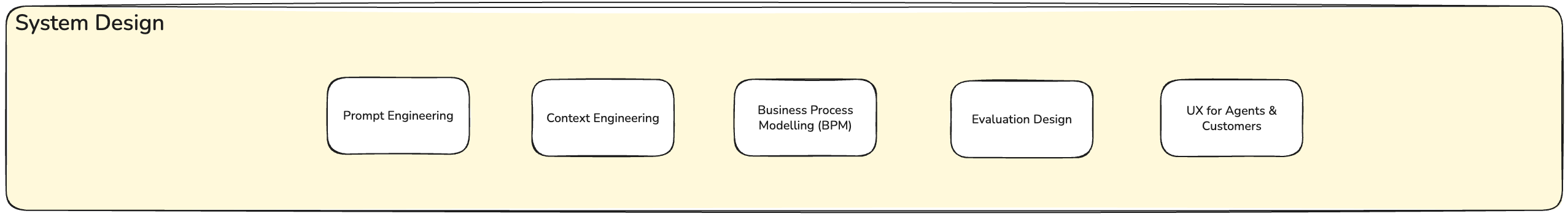

System Design: Shaping Intent

The foundation is solid, the machine is running. Now: what does it actually do?

Prompt Engineering

This isn’t magic incantations. It’s the discipline of translating business intent into clear, testable instructions. Good prompt engineering is boring: it’s specific, it’s versioned, it’s tested against real scenarios. It’s also where most of the value often sits.

Context Engineering

Prompts work in context. Context engineering is the art of giving your AI just enough information to be smart without so much that it gets confused or slow. It’s about what goes into the knowledge base, what goes into the system prompt, what goes into the query, and how those change based on the problem.

Business Process Modelling

Before you automate a process, you need to understand it. This isn’t flowcharting for its own sake; it’s about finding the decision points, the exceptions, the hand-offs. Often the real value comes from redesigning the process while you’re learning it for automation.

Evaluation Design

You need to know whether your AI is doing the right thing. This means defining metrics before you build, understanding your baseline, knowing when to retrain or recalibrate. Evaluation design is often neglected; it’s also where bias and drift are caught early.

UX for Agents & Customers

Your AI isn’t an invisible backend system; humans interact with it. They need to understand what it’s doing, why, and how to correct it. Good UX for agents is clarity: transparent reasoning, clear options to override, easy reporting when something seems wrong.

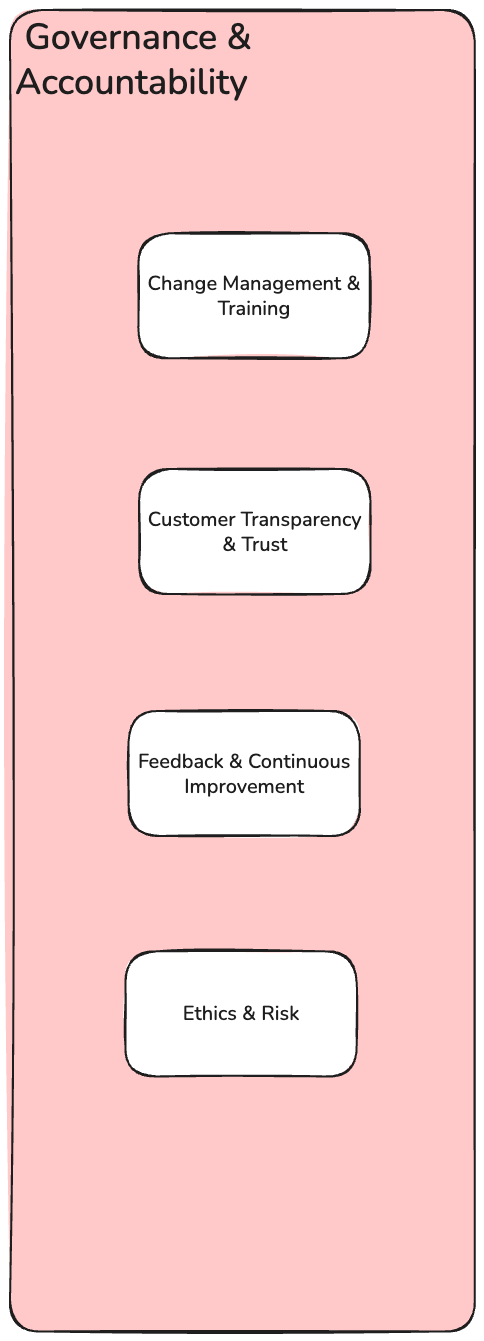

Governance & Accountability: The Organisational Layer

Technology alone doesn’t work. These four concerns run through everything.

Change Management & Training

Your AI will change how people work. If you don’t actively manage that transition - helping people understand what they’re doing differently, why, and how to adapt - you’ll see resistance, workarounds, and wasted capability. Training isn’t a one-time event; it’s ongoing as the system evolves.

Customer Transparency & Trust

People need to know when they’re dealing with an AI. They need to understand its limits. They need a way to provide feedback when it gets things wrong. Trust is fragile; it’s built slowly through consistency and lost quickly through opacity.

Scalable Roadmaps & Governance

You won’t get the design right on day one. You need a governance structure that lets you evolve safely: deciding what to automate next, how to prioritise competing demands, how to retire workflows that no longer make sense. This prevents chaos and keeps stakeholders aligned.

Ethics & Risk Framework

AI amplifies both good decisions and bad ones. You need a principled way to think about fairness, transparency, privacy, and the second-order harms your system might cause. This isn’t compliance theatre; it’s how you build something your company can stand behind.

Conclusion

Enterprise AI capability isn’t built by copying this framework wholesale. It’s built by understanding what you’re aiming for, then moving deliberately towards it.

The framework shows what mature looks like. It’s comprehensive for a reason - each layer solves real problems that arise in production, and removing any of them creates a brittle system that either fails hard or requires heroic individual effort to keep alive.

But you don’t need all of it on day one.

What you do need: a clear intent to build towards it, and the discipline to learn by experimenting safely.

Experimentation is how you unlock these capabilities without betting the company. You take one bounded workflow. You build the minimum version of this framework that lets you run it - audit trails, rollback capacity, clear escalation. You measure whether it actually works. You iterate. You slow down when you discover gaps instead of building around them.

What you’re building towards, through this deliberate process, are systems that are:

- Reliable: they work consistently, they fail gracefully, they can be improved without chaos.

- Transparent: people understand what’s happening, why, and when to trust the result.

- Governed: decisions are deliberate, not accidental; choices are made by humans with accountability.

- Sustainable: the system doesn’t require heroic individual effort or break when people leave.

This is how organisations move from “we tried AI once” to “AI is how we work now.”

The framework is the vision. Experimentation is how you get there. Those four qualities are what success actually looks like.

In the next piece, we’ll map that journey: what truly blocks you, what you learn by doing, and how to sequence your way from learning to capability.